I’ve been looking at backing up some data from an old hard drive recently and would like to compress it so it takes up fewer CDs. Normally I’d just use GZIP, but a friend of mine swears by BZIP2. Knowing that my Linux distro sports at least 4 different compression tools “out of the box”, I thought it was time to get some numbers. Bring on Compression Wars!

The idea’s simple. Gather together a variety of compression tools, test them head-to-head against a variety of file types and see how they perform. A few different file types are needed, as some files compress more easily than others. For example, text files should compress a lot more than video, because video codecs already compress their data.

The sorts of things I’m backing up are: Music (mainly MP3s), Pictures (mainly JPEGs), Videos (a mixture of MPEGs and AVIs / DIVXs), and Software (both in the form of binary files and source code). I have therefore split the test into the following categories:

Binaries

- 6,092,800 Bytes taken from my /usr/bin directory

Text Files

- 43,100,160 Bytes taken from kernel source at /usr/src/linux-headers-2.6.28-15

MP3s

- 191,283,200 Bytes, a random selection of MP3s

JPEGs

- 266,803,200 Bytes, a random selection of JPEG photos

MPEG

- 432,240,640 Bytes, a random MPEG-encoded video

AVI

- 734,627,840 Bytes, a random AVI-encoded video

I have tarred each category so that each test is only performed on one file (as far as I’m aware, tarring the files will not affect the compression tests). Each test was run from a script 10 times and the results averaged to make them as fair as possible. The things I’m interested in here are compression ratio and speed: how much smaller are the compressed files, and how long do I have to wait for them to compress and decompress?
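If you want to repeat this yourself, the tar-then-average approach can be sketched in Python. The original script isn’t shown, so this is only an assumed equivalent built on Python 3’s standard library. `tar_directory` and `average_seconds` are hypothetical helper names. The first packs a directory into one uncompressed tar archive held in memory, and the second averages a function’s run time over 10 runs.

```python
import io
import statistics
import tarfile
import time
from pathlib import Path
from typing import Callable


def tar_directory(path: str) -> bytes:
    """Pack everything under `path` into a single uncompressed tar archive in memory."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        # Name entries after the directory itself, e.g. "sample/a.txt".
        tar.add(path, arcname=Path(path).resolve().name)
    return buf.getvalue()


def average_seconds(fn: Callable[[bytes], bytes], data: bytes, runs: int = 10) -> float:
    """Call fn(data) `runs` times and return the mean wall-clock time in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(data)
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings)
```

For example, `average_seconds(gzip.compress, tar_directory("/usr/bin"))` would time Python’s GZIP binding on a tarred copy of `/usr/bin`.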

Although there are many compression tools available, I decided to use the 5 that I consider the most common: GZIP, BZIP2, ZIP, LZMA, and the classic Unix tool Compress. One of the reasons for this test is to find the best compression, so wherever there was an option, I chose the most aggressive compression setting each tool offers.
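Python’s standard library wraps three of these algorithms plus ZIP, so “use the most aggressive setting” can be sketched as below. These are the library bindings, not the command-line tools used for the graphs, and the dictionary keys are only labels. Compress’s LZW algorithm has no standard-library module, so it is left out. I’ve assumed `lzma.FORMAT_ALONE` (the legacy `.lzma` container) is the closest match to the LZMA tool’s output. `percent_of_original` is a hypothetical helper that computes the measure plotted in the first graph.

```python
import bz2
import gzip
import io
import lzma
import zipfile


def zip_max(data: bytes) -> bytes:
    """Store `data` as a single DEFLATE-compressed member of an in-memory ZIP archive."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_DEFLATED, compresslevel=9) as zf:
        zf.writestr("payload", data)
    return buf.getvalue()


# Highest settings each standard-library binding exposes.
COMPRESSORS = {
    "gzip": lambda d: gzip.compress(d, compresslevel=9),
    "bzip2": lambda d: bz2.compress(d, compresslevel=9),
    "lzma": lambda d: lzma.compress(d, format=lzma.FORMAT_ALONE, preset=9 | lzma.PRESET_EXTREME),
    "zip": zip_max,
}


def percent_of_original(original: bytes, compressed: bytes) -> float:
    """Compressed size as a percentage of the original size (smaller is better)."""
    return 100.0 * len(compressed) / len(original)


if __name__ == "__main__":
    sample = b"the quick brown fox jumps over the lazy dog\n" * 5000
    for name, fn in COMPRESSORS.items():
        print(f"{name:6s} {percent_of_original(sample, fn(sample)):6.2f}%")
```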

Here’s what I found:

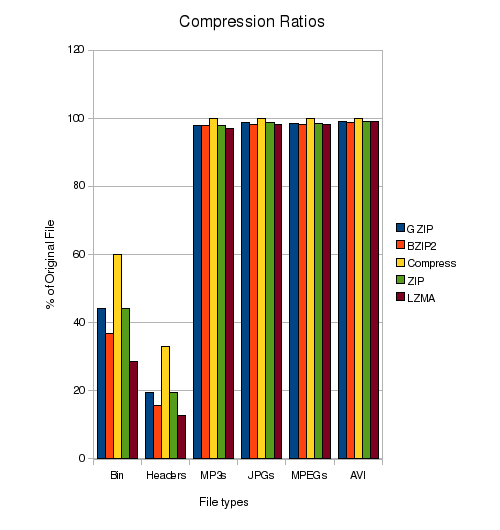

This graph shows the size of the compressed file as a percentage of the original, so the smaller, the better.

So it seems BZIP2 does outperform GZIP across the board, and the less widely used LZMA does even better! I was quite surprised how well the binaries compressed, but the results for the MP3, JPEG, MPEG and AVI files show that there is little to no point in trying to compress these formats; they are already pretty well compressed. The Compress tool (nCompress) did something interesting, too. On the MP3, JPEG, MPEG and AVI files, it churned away for a while trying to squash them and then exited without an error, leaving only the original file. I presume the compressed versions were larger than the originals and there is some logic along the lines of “if output_size > input_size, keep the input”. The clear winner here, though, is LZMA.
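Both effects are easy to reproduce in a few lines of Python 3.9+. Random bytes act as a rough stand-in for MP3, JPEG and video payloads. This is an assumption: those formats are high-entropy, not truly random. GZIP makes random data slightly larger rather than smaller. `compress_or_keep` is a hypothetical helper showing the kind of “keep the original if the output is bigger” check I presume Compress does. It is not Compress’s actual code.

```python
import gzip
import os


def compress_or_keep(data: bytes) -> tuple[bytes, bool]:
    """Hypothetical helper: return (output, was_compressed).

    Mirrors the presumed check: if compressing does not make the
    data smaller, hand back the original bytes untouched.
    """
    packed = gzip.compress(data, compresslevel=9)
    if len(packed) >= len(data):
        return data, False
    return packed, True


# Highly repetitive, text-like input versus random bytes, which stand in
# (as an approximation) for already-compressed MP3/JPEG/video data.
text_like = b"int main(void) { return 0; }\n" * 20_000
already_compressed = os.urandom(len(text_like))

for label, payload in [("text", text_like), ("random", already_compressed)]:
    out, shrunk = compress_or_keep(payload)
    print(f"{label:6s} compressed={shrunk} {len(out)} of {len(payload)} bytes")
```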

So now that we know how well these tools compress, how long do they take?

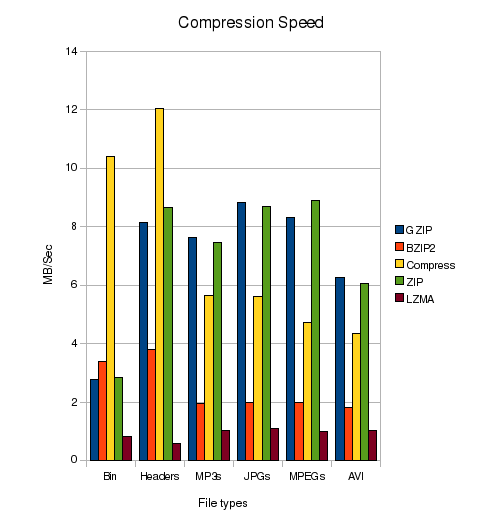

Notice that these speeds are in MB/second, so the larger, the better. Although Compress gives the worst compression, it’s great if you’ve got a lot of files and are in a rush, and GZIP and ZIP are pretty much neck and neck. As good as LZMA is at its job, though, it likes to take its time, running at just 1 MB/s or less.
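To turn those MB/s figures into waiting time, divide the file size by the throughput. Here’s a quick sketch that assumes “MB” on the graph means 2^20 bytes. If it means 10^6 bytes, the AVI example comes out at about 12.2 minutes instead. `minutes_to_process` is just an illustrative name.

```python
MIB = 1024 * 1024  # assumption: "MB" on the graphs means 2**20 bytes


def minutes_to_process(size_bytes: int, mb_per_second: float) -> float:
    """Wall-clock minutes needed to push size_bytes through a tool running at mb_per_second."""
    return size_bytes / (mb_per_second * MIB) / 60


# The 734,627,840-byte AVI at LZMA's roughly 1 MB/s:
print(f"{minutes_to_process(734_627_840, 1.0):.1f} minutes")  # prints "11.7 minutes"
```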

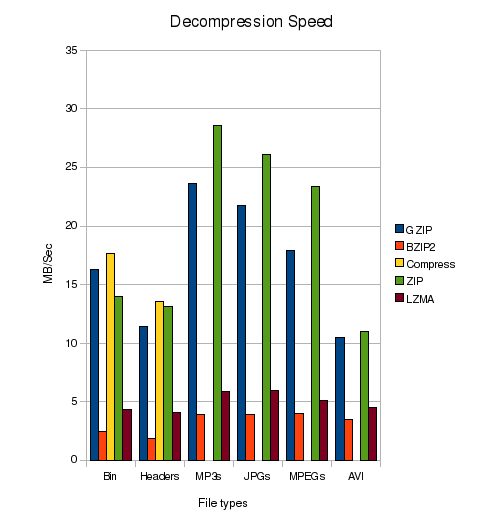

What about decompression. If I’m uploading some large files to my webserver for people to download, I might not mind waiting an hour to compress them really small to save on bandwidth, but I don’t want to do this at the expense of my users. could you imagine, “click here to download, then wait 4 hours for it to decompress…”. Well here’s the results:

Again, speeds here are in MB/second (size of the compressed file divided by time), so the larger, the better. My friend was right that BZIP2 offers better compression than GZIP, but, as shown here, it comes at the expense of the user’s time, with BZIP2 taking over 6 times longer than GZIP to decompress. That’s the difference between waiting 10 minutes or an hour for the same files to decompress! Even the mighty LZMA is, in some cases, nearly twice as fast as BZIP2 here.
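The decompression metric (compressed size divided by time) can be measured with a short script. This minimal sketch uses Python’s `gzip` and `bz2` modules as stand-ins for the command-line tools. `decompress_mb_s` and the synthetic sample are illustrative, and you’d substitute one of the real tarballs. One design note: the numerator is the compressed size, so a tool that compresses harder has fewer bytes to count for the same data. This graph is therefore best read alongside the size graph.

```python
import bz2
import gzip
import time
from typing import Callable

MIB = 1024 * 1024  # assumption: "MB" means 2**20 bytes


def decompress_mb_s(decompress: Callable[[bytes], bytes], blob: bytes, runs: int = 10) -> float:
    """Compressed bytes processed per second (in MB/s), averaged over `runs` decompressions."""
    total = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        decompress(blob)
        total += time.perf_counter() - start
    return len(blob) / MIB / (total / runs)


# Synthetic, text-like sample of a couple of MB; swap in a real tarball for meaningful numbers.
sample = b"".join(b"line %d of some log output\n" % i for i in range(70_000))

for name, compress, decompress in [
    ("gzip", gzip.compress, gzip.decompress),
    ("bzip2", bz2.compress, bz2.decompress),
]:
    blob = compress(sample)
    print(f"{name:5s} {decompress_mb_s(decompress, blob):8.1f} MB/s")
```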

Test Bed

Just to put these tests into perspective for you, the machine I ran them on has a 3.2GHz P4 with 1GB of RAM. I’m sure they would run faster on newer machines, but I believe the results are a good indication of how these tools compare.

Conclusion

The graphs speak for themselves really. If you need heavy compression and are willing to wait for it, use LZMA. If you want to squash things a little, but don’t have much time, GZIP and ZIP will work just fine. Compress (or nCompress), though, seems to be pretty useless for all but the really impatient.

Further Work

There are some further tests I’d like to perform with these tools. I think it would be interesting to see how they perform on different-sized files of the same type. I believe that the larger the file, the better the compression will be, and testing this would show whether it’s better to tar my whole backup before compressing it.
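The tar-first question is easy to probe on a small scale before running the full test. This sketch uses the Python 3.9+ standard library, GZIP at level 9, made-up sample files and hypothetical helper names. It compares gzipping many small, similar files one by one against tarring them and compressing once. Each separately compressed file pays its own header overhead and cannot reference repeated content in its neighbours, which is one reason the tarred version may come out smaller.

```python
import gzip
import io
import tarfile


def individually(files: dict[str, bytes]) -> int:
    """Total bytes when every file is gzipped on its own."""
    return sum(len(gzip.compress(body, compresslevel=9)) for body in files.values())


def tarred_then_compressed(files: dict[str, bytes]) -> int:
    """Bytes when the files are tarred first and the archive is gzipped once."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, body in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(body)
            tar.addfile(info, io.BytesIO(body))
    return len(gzip.compress(buf.getvalue(), compresslevel=9))


# Hypothetical sample: many small, similar source files.
files = {
    f"src/file{i}.c": f"/* file {i} */\n#include <stdio.h>\nint f{i}(void) {{ return {i}; }}\n".encode()
    for i in range(200)
}
print("individual:", individually(files), "bytes")
print("tar first: ", tarred_then_compressed(files), "bytes")
```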

Some of these tools are also available in parallel versions, which would be interesting to run on my little 7-node cluster.
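Parallel versions such as pbzip2 compress independent chunks at the same time and concatenate the results. This works because a sequence of complete bzip2 streams is still valid bzip2 data. Here’s a single-machine sketch of that idea. `parallel_bz2` and the 900 KiB chunk size are my assumptions, not pbzip2’s actual code, and spreading the work across cluster nodes would need more machinery than this. Threads should be enough here because CPython’s `bz2` module releases the GIL while compressing. `bz2.decompress` accepts multi-stream input (Python 3.3+).

```python
import bz2
from concurrent.futures import ThreadPoolExecutor

CHUNK = 900 * 1024  # assumption: split roughly at bzip2's maximum block size


def parallel_bz2(data: bytes, workers: int = 4) -> bytes:
    """Compress independent chunks concurrently and join the resulting bzip2 streams."""
    chunks = [data[i:i + CHUNK] for i in range(0, len(data), CHUNK)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so the streams are joined in the original sequence.
        return b"".join(pool.map(lambda c: bz2.compress(c, compresslevel=9), chunks))


if __name__ == "__main__":
    data = b"".join(b"record %d\n" % i for i in range(300_000))
    packed = parallel_bz2(data)
    print(len(data), "->", len(packed), "bytes; round trip ok:", bz2.decompress(packed) == data)
```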

There are plenty of other compression tools available, some of which, such as PAQ8, are reportedly extremely efficient. These would be interesting to try too, but I hope you’ll agree that the tools I chose are probably the most common, and the tests provide interesting results to help you make a more informed decision about which to use.

Happy Compressing!

Source: http://bashitout.com/2009/08/30/Linux-Compression-Comparison-GZIP-vs-BZIP2-vs-LZMA-vs-ZIP-vs-Compress.html

Takeaway:

In most cases, it comes down to choosing between zip/gz and 7z.